Optimal Survey Length: Impact of survey length on data quality.

PS: Special thanks to Kahyin Toh, a researcher with Milieu, who helped with the data analyses!

Effective survey design is a mix of art and science, and it’s a process that requires the designer to consider a wide range of factors, from how the questions are worded, to how much cognitive load the questions (or questionnaire) impose.

Questionnaire length is also a crucial factor, as a long survey can lead to respondent fatigue, which can result in higher dropout rates and poor data quality. At Milieu, we’ve worked with many clients who come to us with long surveys, as there's often a desire to pack everything into a single survey and leave no stone unturned. In fact, if you participate in most online research panels, it's not uncommon to see surveys that take more than 30 minutes to complete.

Running long survey engagements isn't unheard of. In qual research, such as an interview or focus group, it’s actually common to run an hour-long session with a participant. But this simply doesn’t work for online research. When participants take surveys online, either via a browser or a mobile app, they’re in a different mindset. Sitting across from and speaking to a person for 45 minutes to an hour is a wildly different experience from sitting in front of a screen for the same amount of time, checking boxes. So in market research, you need to think about study design differently for quant versus qual.

At Milieu, we care a lot about the experience of our community when they participate in research online. We've also long known that running long surveys negatively impacts data quality (which is why we have a hard cap of 10 minutes on survey length). But we never got around to actually putting this to the test and gathering hard data, until now!

We decided to run a series of controlled tests on our own panel to explore the relationship between survey length and data quality.

If you're short on time, here's the TL;DR: 10 minutes is the breaking point. Go beyond that in an online survey setting, and you will start to see response quality deteriorate in the following ways:

1. Randomisation of responses: The likelihood of a respondent picking a random response (instead of giving careful consideration) doubles

2. Poorer quality of answers in open-ended questions: An increase in gibberish and non-committal answers (e.g., NIL, no comments), and a decrease in response length

3. Neutral responses: Increased likelihood of picking a "neutral" response option

4. Lower respondent satisfaction: A sharp decline in respondents' survey satisfaction ratings

If you're keen to learn about how we assessed and analysed each of the above, read on!

A novel approach to an age-old question

The question around optimal survey length isn’t a new one in the market research industry. Many studies on this topic, such as this one, have been conducted over the years. However, many of these experiments have focused on the link between survey length and drop-off rate (i.e. respondents who didn't complete the survey). What we feel is missing is an exploration of the relationship between survey length and actual data quality.

Why does this matter?

Focusing on drop-off rates overlooks the potential data quality issues of long surveys for those who didn't drop off. It's common to offer incentives to encourage participation in a survey, and depending on how attractive the incentive is, the participant may be highly motivated to complete a survey regardless of the effort they put in. This issue is particularly relevant to online panel companies (including Milieu) as the core value exchange is typically rewards (via a redeemable points system) in exchange for survey participation. And so if the participants are motivated to complete a survey to get their points, we need to be taking a closer look at the relationship between survey length and response quality.

So we designed an approach to test response quality across a few variables at different questionnaire lengths.

How did we define Survey Length & Quality of Responses?

The length of a survey was defined as the total number of questions a respondent was required to answer.

Quality of response was quantified using the 4 criteria below:

1. Attention Check / Response Randomization: Tracking respondent attention levels via attention check questions and measuring whether the attention check failure rate increases as survey length increases.

2. Quality of open-end responses: Tracking the quality of open-ended (OE) responses (e.g., rate of non-participation, rate of gibberish responses) and measuring whether the share of bad OEs increases as survey length increases.

3. Acquiescence bias: Tracking whether respondents are more likely to agree with a statement-based question as the survey length increases.

4. Survey Satisfaction: Tracking respondent satisfaction with the survey and measuring whether this decreases as the length of survey increases.

Study Design

To assess how the above four metrics vary with survey length, we designed four surveys of 10, 20, 50, and 60 questions. To keep the sequence of questions consistent, each survey was built from a standard brand health survey. Brand health studies ask about metrics such as brand awareness and brand familiarity for a set of brands in a specific category or sector. Our brand health study includes the 10 core questions shown below, and we repeated this brand sequence, with a different brand category each time, to build each questionnaire length variant.

1. Awareness. Which of these [brand category] brands, if any, have you heard of?

2. Familiarity. Which of these [brand category] brands, if any, are you familiar with?

3. Ever usage. Which of these [brand category] brands, if any, have you ever purchased?

4. Past usage. Which of these [brand category] brands, if any, have you ever purchased in the last three months?

5. Positive impression. Which of these [brand category] brands, if any, do you have a positive impression of?

6. Negative impression. Which of these [brand category] brands, if any, do you have a negative impression of?

7. Consideration. Which of these [brand category] brands, if any, would you consider purchasing from in the future?

8. Recommendation. Which of these [brand category] brands, if any, would you recommend to your family and friends?

9. OE recommendation OR non-recommendation reason. Why would you recommend [brand name] to your friends or family? OR Why would you NOT recommend [brand name] to your friends or family?

10. Attitude statement agreement. To what extent do you agree with the statement: "I am not particularly loyal to brands and switch brands easily."

So, for example, the 10-question variant included 1 sector/sequence, the 20-question variant included 2 sectors/sequences, and so on. This design allowed us to increase survey length while keeping the experience consistent for the respondent.

Each survey was launched with at least N = 3000 respondents to ensure a robust sample size, with a margin of error of ±2 percentage points or lower. Note that the brand categories were all unique.
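The ±2 figure is in line with the textbook margin of error for a proportion. As a minimal sketch, assuming simple random sampling, a 95% confidence level (z = 1.96) and the worst-case proportion p = 0.5 (none of which are stated in this article), the calculation looks like this; the function name is our own:

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Normal-approximation margin of error for a proportion, in percentage points.

    Assumptions: simple random sampling; p = 0.5 gives the widest (worst-case)
    interval; z = 1.96 corresponds to 95% confidence.
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)


print(f"N = 3000: +/-{margin_of_error(3000):.1f} percentage points")
```

Under these assumptions, N = 3000 gives roughly ±1.8 percentage points, comfortably inside the ±2 target.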

Before we proceed, it's important to highlight how the number of questions in a survey translates into time. Based on our data, a respondent on the Milieu panel typically answers around 5 questions per minute. This can vary with the subject matter and composition of the survey, but on average, most surveys run on our panel adhere to the 5 questions/minute benchmark. Using this standard, here's how the number of questions translates into time taken:

1. 10 questions - 2 minutes

2. 20 questions - 4 minutes

3. 50 questions - 10 minutes

4. 60 questions - 12 minutes

It's important to note that the questions-to-time conversion won't be the same on every survey platform. In fact, the industry standard is usually closer to 3 questions per minute. But because Milieu is a mobile-only platform with a highly controlled survey UI, and we avoid question formats that impose a high cognitive load (such as matrix/grid questions), respondents on our platform tend to answer more questions per minute. When interpreting the results, we suggest you focus on the time taken rather than the number of questions.
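Because the conversion is simply the question count divided by an answering pace, it is easy to redo for another platform. A minimal sketch, assuming a constant pace throughout the survey; the function and constant names are hypothetical, and the two paces are the 5/minute Milieu benchmark and the roughly 3/minute industry figure mentioned above:

```python
import math

# Answering paces discussed above (questions per minute).
MILIEU_PACE = 5.0
INDUSTRY_PACE = 3.0


def estimated_minutes(num_questions: int, pace: float = MILIEU_PACE) -> float:
    """Rough completion time, assuming a constant answering pace."""
    return num_questions / pace


def question_budget(minutes: float, pace: float = MILIEU_PACE) -> int:
    """Largest whole number of questions that fits within a time budget."""
    return math.floor(minutes * pace)


for n in (10, 20, 50, 60):
    print(f"{n} questions: {estimated_minutes(n):.0f} min at 5/min, "
          f"{estimated_minutes(n, INDUSTRY_PACE):.1f} min at 3/min")

print("10-minute budget:", question_budget(10), "questions at 5/min,",
      question_budget(10, INDUSTRY_PACE), "at 3/min")
```

At 3 questions per minute, the same 10-minute budget holds only about 30 questions, which is why time is the more portable yardstick.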

How we analysed the data and what we learned

1. Attention Check / Response Randomization

To assess whether respondents were randomising their responses, we inserted an attention check question towards the end of each survey variant. Below is an example attention check question:

Which of the following brands, if any, starts with the letter P?

(This is a multi-select question with a randomised response list)

- Adidas

- Nike

- Reebok

- Puma (Correct Answer)

- Saucony

- ASICS

- None of the above

The attention checks were configured as multi-select questions, so respondents could select multiple answers, but each attention check had only 1 correct answer. Each attention check was also designed to match the brand category of the current sequence/sector.

Respondents who selected ONLY the correct answer option were classified as PASS. Respondents who selected a wrong answer option OR multiple response options (even though one of them may have been correct) were classified as FAIL.
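The PASS/FAIL rule above amounts to a strict set-equality check. A minimal sketch, assuming each respondent's answer is stored as a set of selected option labels (a representation chosen for illustration, not Milieu's actual pipeline); the function names are hypothetical:

```python
from typing import Iterable


def attention_check_result(selected: set[str], correct: str) -> str:
    """PASS only if the respondent selected the correct option and nothing else."""
    return "PASS" if selected == {correct} else "FAIL"


def fail_rate(responses: Iterable[set[str]], correct: str) -> float:
    """Percentage of respondents classified as FAIL."""
    results = [attention_check_result(r, correct) for r in responses]
    return 100 * results.count("FAIL") / len(results)


# Assumed representation: each response is the set of option labels selected.
responses = [
    {"Puma"},          # PASS
    {"Puma"},          # PASS
    {"Puma", "Nike"},  # FAIL: correct answer plus an extra option
    {"Reebok"},        # FAIL: wrong answer
]
print(fail_rate(responses, correct="Puma"))  # 50.0
```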

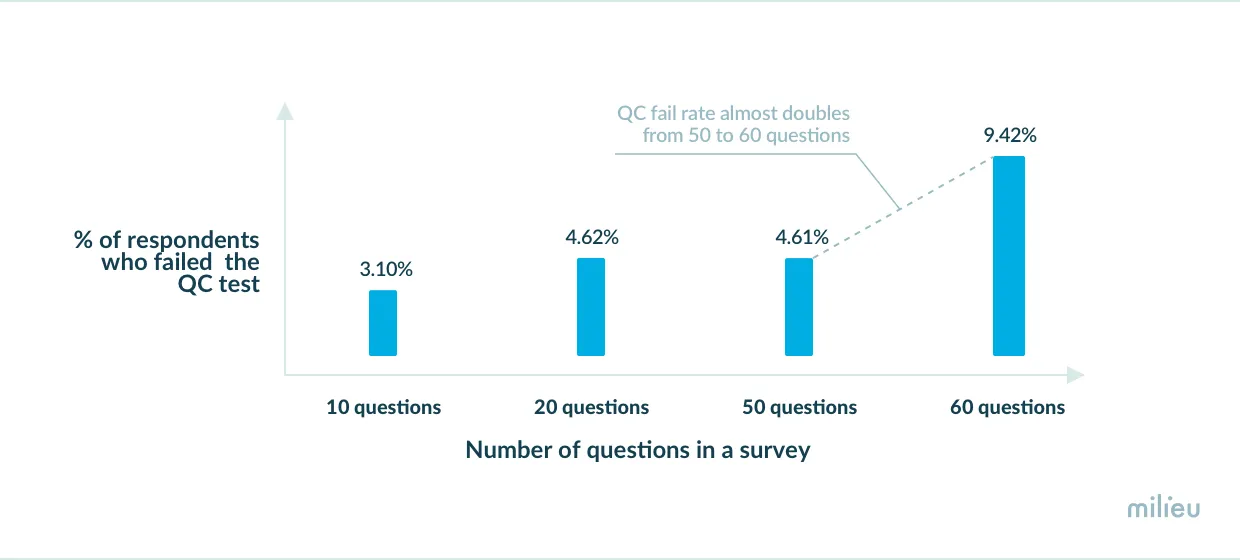

Figure 2 shows the attention check fail rate across the 4 survey length variants.

Figure 2: Relationship between survey length (number of questions) and response accuracy (% of respondents who failed the attention check question).

The analysis revealed that the fail rate increased marginally, from 3.10% for 10 questions to 4.6% for 20 questions. Going from 20 to 50 questions did not lead to a material increase in the rate of attention check failure. However, the fail rate roughly doubled going from 50 questions (4.6%) to 60 questions (9.42%), suggesting a considerable drop in attention once you break through 50 questions, or 10 minutes.
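Comparing each variant with the next-shorter one makes the jump at 60 questions easy to see. A small sketch using the fail rates reported above; the function name is hypothetical:

```python
# Attention check fail rates (%) by survey length, as reported above.
FAIL_RATES = {10: 3.10, 20: 4.6, 50: 4.6, 60: 9.42}


def step_ratios(rates: dict[int, float]) -> dict[tuple[int, int], float]:
    """Ratio of each variant's fail rate to that of the next-shorter variant."""
    lengths = sorted(rates)
    return {(a, b): rates[b] / rates[a] for a, b in zip(lengths, lengths[1:])}


for (a, b), ratio in step_ratios(FAIL_RATES).items():
    print(f"{a} -> {b} questions: x{ratio:.2f}")
```

Only the 50-to-60 step roughly doubles the fail rate (about ×2.05); the earlier steps are much smaller (about ×1.48, then ×1.00).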

2. Quality of open-end responses

We also wanted to understand how the length of a survey affects the quality of open-end responses. To measure this, we added an open-end question at the end of each questionnaire variant, where respondents were free to type whatever they liked. We analysed the open-ends as follows:

1. Number of gibberish/nonsensical responses. These are answers that make no sense and are irrelevant to the question asked, for instance “ffffffff”, “asdfss”, etc.

2. Number of non-committal responses, e.g. NA/Nil/No comment/IDK.

3. Mean number of characters. This is to assess the length of their responses.
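The three measures above can be sketched in a few lines. This is a minimal illustration, not the classification we actually used: the non-committal list is hypothetical, and "gibberish" is approximated by a crude heuristic (no word from a supplied vocabulary appears in the answer), which is our assumption:

```python
import re
from statistics import mean

# Hypothetical list of non-committal answers; extend it for your own panel.
NON_COMMITTAL = {"na", "n/a", "nil", "no comment", "no comments", "idk", "none"}


def classify_open_end(text: str, vocabulary: set[str]) -> str:
    """Label a response as 'non-committal', 'gibberish' or 'valid'."""
    cleaned = text.strip().lower().strip(".!")
    if cleaned in NON_COMMITTAL:
        return "non-committal"
    words = re.findall(r"[a-z']+", cleaned)
    # Crude heuristic (assumption): no recognisable word at all counts as gibberish.
    if not any(word in vocabulary for word in words):
        return "gibberish"
    return "valid"


def summarise(responses: list[str], vocabulary: set[str]) -> dict[str, float]:
    labels = [classify_open_end(r, vocabulary) for r in responses]
    n = len(responses)
    return {
        "gibberish_pct": 100 * labels.count("gibberish") / n,
        "non_committal_pct": 100 * labels.count("non-committal") / n,
        # Mean length is taken over all responses here (an assumption).
        "mean_chars": mean(len(r.strip()) for r in responses),
    }


vocab = {"good", "quality", "shoes", "comfortable", "price"}  # stand-in for a real word list
print(summarise(["Good quality shoes", "ffffffff", "NIL", "comfortable"], vocab))
```

A vocabulary check like this is blunt: it will flag legitimate answers in other languages or heavy slang, so flagged responses are worth a manual look before discarding them.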

The analysis revealed an increase in the proportion of gibberish responses beyond 20 questions, with the rate rising from 0.5% to 0.8% for 50 questions and 1.1% for 60 questions. What does this mean? In a 60-question survey with N = 1000 respondents, you would have to discard roughly N = 10 responses because they contained unusable text. While this is relatively low, and such responses can be removed without much impact on the analysis, we were more curious about respondents who give non-committal answers, i.e. NA/Nil/No comment/IDK.

When it comes to non-committal responses, going from 10 to 20 questions actually saw a decrease. However, there was a sharp increase when survey length rose to 50 questions (13.9%) and 60 questions (15.7%). What does this mean? In a 60-question survey with N = 1000 respondents, about N = 157 would resort to a “no opinion” response, which suggests that respondent fatigue is setting in. Respondents who may have been giving you good data earlier in the survey are now taking the path of least effort (also known as satisficing), keying in “idk”/“nil”/“na” instead of providing relevant open-end responses specific to the question. This is not ideal, since you may lose out on valuable insight.
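To translate these rates into the number of responses you would lose at a given sample size, multiply the rate by N. A small sketch using the reported 50- and 60-question rates; the helper name is hypothetical, and the default N = 1000 simply mirrors the examples above:

```python
# Open-end rates (%) reported above for the two longest variants.
GIBBERISH_PCT = {50: 0.8, 60: 1.1}
NON_COMMITTAL_PCT = {50: 13.9, 60: 15.7}


def expected_count(rate_pct: float, sample_size: int = 1000) -> int:
    """Expected number of respondents affected, rounded to a whole person."""
    return round(rate_pct / 100 * sample_size)


for length in (50, 60):
    print(f"{length} questions, N = 1000: "
          f"~{expected_count(GIBBERISH_PCT[length])} gibberish, "
          f"~{expected_count(NON_COMMITTAL_PCT[length])} non-committal")
```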

Finally, we analysed the mean number of characters respondents typed in their open-end responses. Going from 50 to 60 questions led to a drop in the mean number of characters, suggesting that longer surveys can lead to less detailed responses to open-ended questions.

3. Acquiescence Bias

We hypothesised that the more questions you ask in a survey, the greater the tendency to “agree” with statement questions. There is a general tendency to appear “agreeable” in surveys, and with increased cognitive load, the temptation to “agree” with a statement without thinking it through is perhaps greater.

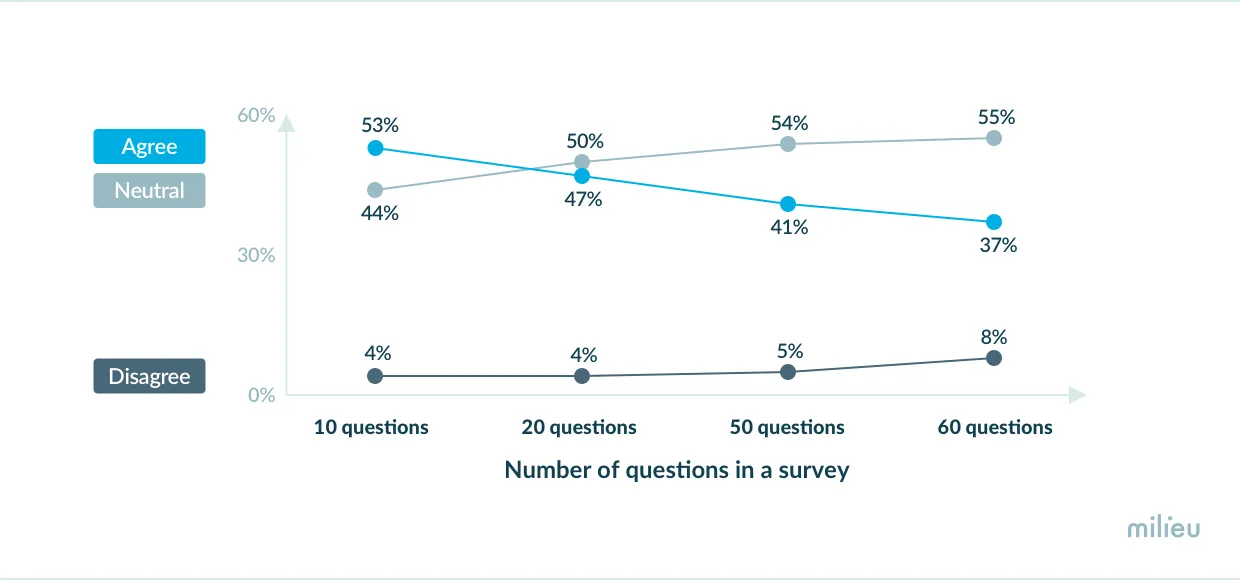

While the results didn’t validate this exact mechanism, we observed an interesting effect of survey length on the response pattern: as the number of questions increases, respondents are more likely to select a “neutral” response option.

Respondents were asked “To what extent do you agree with the statement: 'I am not particularly loyal to brands and switch brands easily'?” at the end of each survey. With increasing survey length, agreement with the statement gradually decreased, from 53% for the 10-question survey to 37% for the 60-question survey. In contrast, selection of the neutral response option increased from 44% for the 10-question survey to 55% for the 60-question survey.
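Tracking this shift comes down to comparing the share of agree versus neutral answers for each variant. A minimal sketch, assuming a 5-point agreement scale with the option labels shown and treating the top two boxes as "agreement" (the article does not list the actual response options); the function name is hypothetical:

```python
from collections import Counter

# Assumed 5-point agreement scale; the article does not list the actual options.
AGREE = {"Strongly agree", "Agree"}
NEUTRAL = {"Neither agree nor disagree"}


def response_shares(answers: list[str]) -> dict[str, float]:
    """Percentage of answers that agree, are neutral, or fall anywhere else."""
    counts = Counter(answers)
    n = len(answers)
    agree = sum(counts[option] for option in AGREE)
    neutral = sum(counts[option] for option in NEUTRAL)
    return {
        "agree_pct": 100 * agree / n,
        "neutral_pct": 100 * neutral / n,
        # Anything not in AGREE or NEUTRAL is counted as disagreement.
        "disagree_pct": 100 * (n - agree - neutral) / n,
    }


print(response_shares(["Agree", "Strongly agree", "Neither agree nor disagree", "Disagree"]))
```

Running this per variant and comparing `agree_pct` against `neutral_pct` gives the kind of comparison described above, where agreement fell while the neutral share rose.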

The shift from a positive response to a non-committal, neutral response may be indicative of respondent fatigue. With declining attention levels towards the question content or reduced motivation to provide their “true” opinion, respondents may be tempted to go with neutral response options to reduce cognitive effort. This in turn compromises data quality.

4. Survey Satisfaction Rating

Finally, we asked respondents to rate their satisfaction with the survey at the end of each questionnaire. Who better than the respondents themselves to provide feedback on the survey-taking experience for different survey lengths? The results revealed that while the satisfaction score was 91% for the 10-question survey and 88% for 20 questions, it plummeted to 56% and 57% for 50 and 60 questions, respectively. This explicit measure of the survey-taking experience underscores the negative effect a long survey can have on respondents' mood and psychology, which in turn can affect how well thought out their responses are.

Conclusion

As most of the existing literature and experiments on this topic have focused on the correlation between survey length and drop-off rate, we set out to conduct an experiment that went deeper into the relationship between length and response quality. And we found some very compelling results across a number of variables, from respondent attention levels to overall survey satisfaction.

In the end, we found that 10 minutes (on average, around 50 questions on our platform) is the clear breaking point at which you start to lose panelists' attention and respondent fatigue sets in.

So when you're launching your next online survey, whether on your own with a survey tool like Google Forms or through a professional panel provider, follow the 10-minute rule! Otherwise, you may be putting your data at risk.

Hope you enjoyed this article, and thanks for reading!
